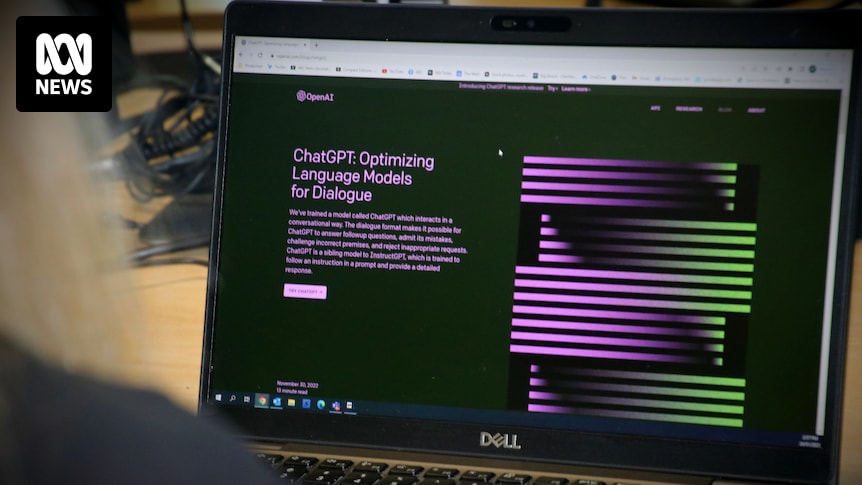

An alarming data breach involving the personal details of up to 3,000 individuals has sparked significant privacy concerns in New South Wales, Australia. The breach, which occurred when a contractor for the NSW Reconstruction Authority uploaded sensitive information to ChatGPT in March, underscores the potential risks posed by artificial intelligence to personal security.

The exposed data, which includes names, contact details, and health information of those connected to a flood recovery program, raises the question: can private information uploaded to AI platforms be accessed by other users? Experts in AI, data, and cybersecurity have weighed in on the implications of this breach.

Understanding the Data Breach

It remains unclear what happened to the data, but it may have been included in the training material for ChatGPT. According to Dr. Aaron Snoswell, a senior research fellow in AI accountability at Queensland University of Technology, companies like OpenAI, which owns ChatGPT, regularly update their AI models using vast amounts of data, including user data unless users opt out.

“They use a bunch of mathematics to try to force these models to try to appear intelligent by mimicking what it sees in that collection of data,” Dr. Snoswell explained.

Dr. M.A.P. Chamikara, a senior research scientist at CSIRO’s Data61, noted that if personal information was inadvertently included in the training data, there is a small chance it could influence the system's responses. The risk would arise if users attempted to extract this information through sophisticated prompts.

Can Personal Data Be Retrieved?

If the breached data was used in ChatGPT’s training material, Dr. Snoswell says it is theoretically possible for someone to retrieve it. However, he emphasizes that AI models do not store data the way traditional computer files do, because of the enormous amount of information they process.

“It’s much more like a statistical soup of approximations,” he said, adding that the models aim to please users, which might lead to inaccurate responses.

Dr. Snoswell cautioned that while these models might attempt to comply with user requests, the information provided cannot always be trusted.

The Broader Implications of the Breach

This incident is not isolated. Australia has experienced several significant data breaches in recent years, including high-profile cases involving Optus and Medibank. Dr. Snoswell believes the Reconstruction Authority breach was accidental rather than malicious.

“The data has been uploaded to one unauthorized third party, rather than stolen by someone maliciously and then sold on to other parties or maybe used in a ransom situation,” he said.

Dr. M.A.P. Chamikara warns that the worst-case scenario would involve the data being copied and used for scams or identity theft, although this seems unlikely in the current case.

Protective Measures and Future Steps

The NSW Reconstruction Authority plans to contact those affected by the breach in the coming week. The authority is collaborating with Cyber Security NSW to monitor the internet and dark web for any signs of data exposure. At present, there is no evidence of third-party access.

For those concerned, ID Support NSW offers expert advice and resources for individuals affected by data breaches. Dr. M.A.P. Chamikara advises taking precautions such as changing passwords, enabling two-factor authentication, and monitoring financial activities for any unusual occurrences.

“Watch for suspicious emails, calls, or messages and never click on the links available in these emails or messages,” Dr. Chamikara recommended.

He also emphasized the importance of treating AI platforms as public forums, advising against sharing personal information with them.

Removing Data from AI Platforms

For those worried about their data on ChatGPT, OpenAI provides a function to request the removal of personal information. Users can access the OpenAI privacy center to make a personal data removal request.

“They’ll do their best to remove that data, potentially—but the catch here is that in Australia, our data laws are not as strong as some other countries,” Dr. Snoswell noted.

As AI technology continues to evolve, this incident serves as a stark reminder of the need for robust data protection measures and the importance of understanding the potential risks associated with emerging technologies.