If you can’t easily access or afford a mental health specialist, you might turn to artificial intelligence as a sort of “therapist” to get you by. AI chatbots are always available and very empathetic, but evidence shows they can give generic, incorrect, and even harmful answers.

Shocking allegations have emerged that chatbots encouraged a 13-year-old to take his own life and urged a Victorian man to murder his own father, even providing instructions. These incidents have raised alarm bells about the safety of AI in mental health. OpenAI, which makes the popular ChatGPT chatbot, is currently facing multiple wrongful death lawsuits in the U.S. from families who say the chatbot contributed to harmful thoughts.

Introducing MIA: A Smarter, Safer Chatbot

Researchers at the University of Sydney’s Brain and Mind Centre have set out to do things differently. The team says it has been working on a smarter, safer chatbot that thinks and acts like a mental health professional. It’s called MIA, which stands for Mental Health Intelligence Agent, and the ABC was invited for a sneak peek to see how it works.

What MIA Does Well

Researcher Frank Iorfino conceived the idea for MIA after a friend inquired about mental health resources. “I was kind of annoyed the only real answer I had was, ‘Go to your GP.’ Obviously, that’s the starting point for a lot of people and there’s nothing wrong with that, but as someone working in mental health I thought, ‘I need a better answer to that question,'” Dr. Iorfino said.

He pointed out that some GPs do not have extensive mental health expertise, and even when they refer a patient to a specialist, there can be long waits. MIA was developed to give people immediate access to the expertise of some of the best psychiatrists and psychologists who work at the Brain and Mind Centre. Unlike other chatbots, MIA doesn’t scrape the internet to answer questions; it only uses its internal knowledge bank made up of high-quality research.

According to the researchers, this means it doesn’t “hallucinate”, the AI term for exaggerating or making up information. MIA assesses a patient’s symptoms, identifies their needs, and matches them with the right support by relying on a database of decisions made by real clinicians. It’s designed to be particularly useful for anyone with common conditions like anxiety or depression, and has been trialed on dozens of young people in a user testing study.

Testing MIA’s Capabilities

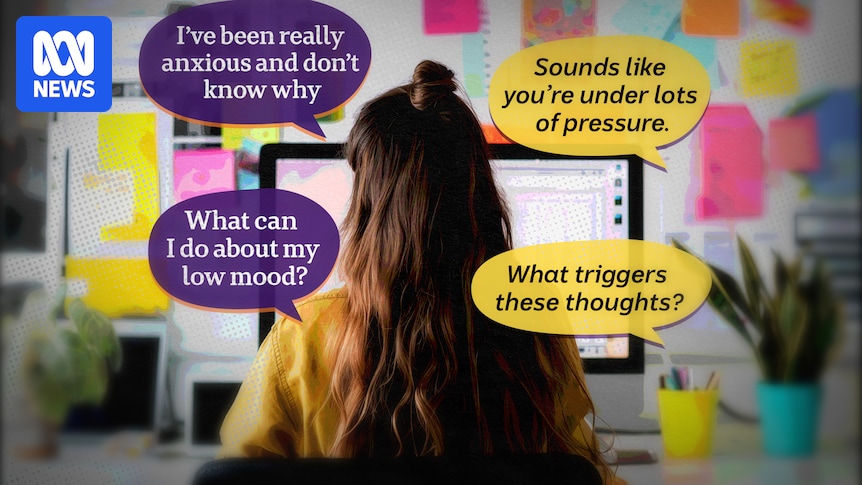

To put it to the test, I asked MIA several fictional questions based on common emotions and experiences, including: “I have been feeling anxious for a few months now. Things at work are just so intense that I am feeling overwhelmed and not able to deal with the stress. Can you help?”

The first thing MIA asked was whether I had thoughts about self-harming, so it could determine whether I needed immediate crisis support. I told MIA I’m safe, and over the course of about 15 minutes, it asked a series of questions to determine:

- Whether I have any friends or family I can talk to about my feelings

- Whether I’d consider expanding my support system

- Whether there are specific situations or stressors that trigger anxious thoughts

- What my physical health is like

- Whether I’ve explored any treatments for anxiety in the past

MIA also explains why it is asking each question. Transparency is key with MIA, and it shows what conclusions and assumptions it makes. Users can even edit conclusions if they feel MIA hasn’t got it exactly right.

Recommendations and Professionalism

Once MIA feels confident it knows enough about a patient, it triages them and suggests actions. It uses the same triage framework a clinician does and ranks patients between a level one—mild illness that can benefit from self-management—and a level five—severe and persistent illness that requires intensive treatment.

In this case, it put me at level three and recommended self-care techniques such as exercise, as well as professional support to explore cognitive behavioral therapy. It did not recommend anything like mindfulness or meditation, because during our session I mentioned I wasn’t a fan. Lastly, it suggested relevant support services in my area and told me how to monitor my symptoms.

Users can return to MIA over time to discuss their symptoms or raise new issues, because it remembers everything from previous sessions. Importantly, patient data isn’t used to train the model.

Comparing MIA to ChatGPT

When I gave ChatGPT the same prompt about feeling anxious, it didn’t probe for information nearly as much as MIA. It jumped straight into problem-solving and advice, even though it knew very little about me. It said, “You’re not alone,” without knowing anything about my support network and told me, “I’m here with you,” as though it were a real person.

While MIA is warm and empathetic, it does not try to befriend users and maintains a much more professional tone. ChatGPT did invite me to share more information, but not until the end of a lengthy answer.

MIA’s Approach to Crisis Situations

There have been instances of ChatGPT giving dangerous responses to people in crisis, so I wanted to see how MIA handled prompts that suggested someone was in extreme distress. I’ve chosen not to include the questions here, but I found MIA was very clinical in its responses. It tried to determine what risk I posed to myself or others and recommended I seek urgent professional help. Unlike ChatGPT, it did not try to keep the conversation going.

Dr. Iorfino says that’s because MIA has been engineered to know its limits and not make people think they can use it in place of professional help. One drawback was MIA’s lack of follow-up after it provided recommendations on where to get professional help, relying on the user to take the initiative to reach out.

In the future, the researchers want MIA to directly refer the user to a support service, like Lifeline, so they can be tracked through the system.

Challenges and Future Developments

When I first tried MIA, it got caught in a loop while trying to complete its initial assessment and kept asking the same question again and again. The researchers worked on a fix, and when I tried again, it ran much more smoothly. Dr. Iorfino said MIA got stuck because it was being too cautious.

“That’s one of the biggest differences between this system and anything else that’s available on the internet now… it has what I like to think of as ‘gates’ that the responses have to go through.”

Getting through these “gates” takes time, so MIA isn’t as fast as other chatbots, but in the future, it should be able to do a full assessment in five to 10 minutes.

Looking Ahead

MIA is expected to be ready for public release next year. The researchers want it to be free and hosted somewhere obvious like the federal government’s HealthDirect website, where many people go to check their symptoms. MIA can’t replace a professional human, but experts believe “therapy” chatbots are here to stay as Australia’s mental health workforce cannot keep up with demand.

Professor Jill Newby, a clinical psychologist at the Black Dog Institute, supports evidence-based chatbots with strong guardrails. She doesn’t want to see more “general” mental health apps on the market that aren’t backed by evidence. But building something safe took a lot of money, she said, and lengthy clinical trials were needed to show it actually did improve someone’s quality of life.

Even though the popular ChatGPT was recently updated to include some extra safety nets, Professor Newby said it still told people what they wanted to hear.

“You like it because it sounds so convincing and the research sounds powerful but it’s actually completely wrong… I only see that because I have the knowledge and literature to go and check.”

The Therapeutic Goods Administration regulates some online mental health tools, but Professor Newby says many “skirt” around the rules.

“They do this by saying the program is for ‘education’, for example, when it’s actually being used for mental health advice.”

The TGA is currently reviewing the regulations that apply to digital mental health tools.