New York, NY—Jan. 14, 2026—In a groundbreaking development, a team from Columbia Engineering has announced the creation of a robot capable of mastering lip syncing, a significant leap forward in human-robot interaction. This advancement addresses a long-standing challenge in robotics: the ability to mimic human facial expressions accurately, particularly lip movements, which are crucial for effective communication.

The announcement comes as robots increasingly integrate into everyday life, yet still struggle with the subtleties of human-like interaction. Despite advancements in robotics, humanoid robots often exhibit limited facial expressiveness, resulting in what is known as the “Uncanny Valley” effect, where their appearance and movements are perceived as eerie or unsettling.

Overcoming the Uncanny Valley

Humans naturally focus on lip motion during face-to-face conversations, attributing significant importance to facial gestures. While we may overlook awkward body movements, we are less forgiving of facial inaccuracies. This has been a major hurdle for humanoid robots, which often appear lifeless due to their inability to mimic human lip movements convincingly.

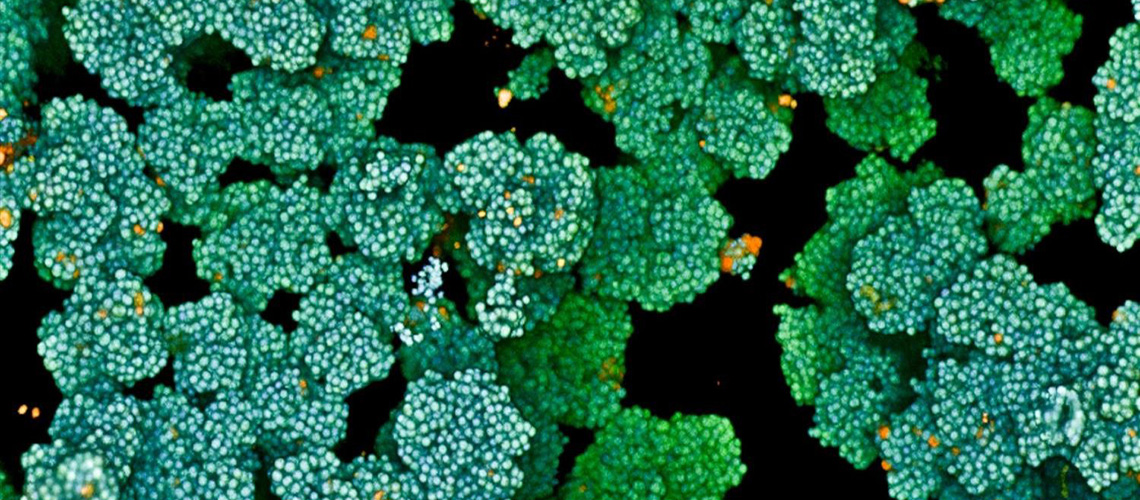

The Columbia Engineering team, led by Hod Lipson, James and Sally Scapa Professor of Innovation, has developed a robot that learns lip motion through observational learning. As detailed in their study published in Science Robotics, the robot uses a combination of mirror observation and video analysis to improve its lip-syncing capabilities.

Learning Through Observation

The robot’s journey to mastering lip syncing began with it observing its own reflection, much as a child experiments with facial expressions in a mirror. Equipped with 26 facial motors, the robot explored various facial gestures, gradually learning to control its motors to replicate specific expressions. The researchers call this approach a “vision-to-action” language model (VLA); it allowed the robot to learn how its motor commands change its facial appearance.
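To make the mirror stage concrete, here is a minimal sketch of the general idea: try random motor commands, record what each one looks like, then look up the command that best matches a desired expression. It is an illustration only, not the team's VLA model. The `simulated_face` function, the two-number "appearance," and the nearest-neighbor lookup are all assumptions made for this example; only the count of 26 motors comes from the article.

```python
import random

NUM_MOTORS = 26  # motor count from the article; everything else is hypothetical


def simulated_face(command):
    """Stand-in for 'robot moves, camera sees its mirror image'.

    Maps 26 motor values in [0, 1] to a toy appearance:
    (mouth openness, mouth width). A real system would extract
    facial landmarks from camera frames instead.
    """
    openness = sum(command[:13]) / 13
    width = sum(command[13:]) / 13
    return (openness, width)


def babble(num_samples, rng):
    """Try random motor commands and record what each one looks like."""
    memory = []
    for _ in range(num_samples):
        command = [rng.random() for _ in range(NUM_MOTORS)]
        memory.append((simulated_face(command), command))
    return memory


def command_for(target, memory):
    """Return the remembered command whose observed appearance is
    closest (squared Euclidean distance) to the target appearance."""
    def distance(entry):
        seen, _ = entry
        return sum((s - t) ** 2 for s, t in zip(seen, target))
    return min(memory, key=distance)[1]


rng = random.Random(0)           # fixed seed so the run is repeatable
memory = babble(2000, rng)       # the "mirror" exploration phase
target = (0.6, 0.4)              # desired (openness, width)
command = command_for(target, memory)
achieved = simulated_face(command)
```

A lookup table like this scales poorly; a learned model that generalizes between observed expressions would be the natural replacement, but the two-phase structure (explore, then invert) is the same.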

Subsequently, the robot was exposed to hours of YouTube videos featuring human speech and singing. By analyzing these videos, the robot’s AI learned to associate audio with corresponding lip movements, enabling it to sync its lips with a variety of sounds and languages.
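The audio-to-lip association can be illustrated with a deliberately tiny example: fit a straight line from an audio feature to a mouth pose using paired frames, then apply it to new audio. This is a toy stand-in, not the learning method used in the study. The training numbers, the choice of loudness as the audio feature, and the names `fit_line` and `predict_openness` are all hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical paired frames taken from video of a speaker:
# per-frame audio loudness and the mouth openness seen in that frame.
loudness = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
openness = [0.05, 0.20, 0.35, 0.50, 0.65, 0.80]

slope, intercept = fit_line(loudness, openness)


def predict_openness(level):
    """Predict mouth openness for a loudness value, clamped to [0, 1]."""
    return min(1.0, max(0.0, slope * level + intercept))


# Drive the lips from audio the model has not seen before.
new_audio = [0.1, 0.5, 0.9]
lip_track = [predict_openness(level) for level in new_audio]
```

Real speech needs far richer inputs and outputs than one loudness value and one openness value, since different sounds at the same volume require different lip shapes.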

“The more it interacts with humans, the better it will get,” said Hod Lipson, emphasizing the robot’s potential for continuous improvement.

Implications for Human-Robot Interaction

This development follows a decade-long effort by Lipson and his team to enhance robot communication through facial gestures. The ability to sync lips accurately is seen as a crucial component of more holistic robotic communication, complementing conversational AI technologies like ChatGPT or Gemini.

Yuhang Hu, the study’s lead researcher, highlighted the potential for robots to form deeper connections with humans. “When the lip sync ability is combined with conversational AI, it adds a whole new depth to the connection the robot forms with the human,” Hu explained. The robot’s ability to imitate nuanced facial gestures could significantly enhance its emotional engagement with users.

The Future of Humanoid Robotics

As humanoid robots find applications in diverse fields such as entertainment, education, medicine, and elder care, the importance of lifelike facial expressions cannot be overstated. Lipson and Hu predict that warm, expressive faces will become essential for robots designed to interact with humans.

“There is no future where all these humanoid robots don’t have a face. And when they finally have a face, they will need to move their eyes and lips properly, or they will forever remain uncanny,” Lipson said.

Some economists forecast the production of over a billion humanoid robots in the next decade, underscoring the urgency of overcoming the Uncanny Valley. Accurate lip syncing is seen as a critical step toward that goal.

Challenges and Considerations

Despite the promising advancements, the researchers acknowledge the challenges that remain. The robot still struggles with certain sounds, such as ‘B’ and ‘W’, which involve complex lip movements. However, Lipson is optimistic about future improvements as the robot continues to learn and adapt.

Beyond technical hurdles, the ethical implications of increasingly lifelike robots are a topic of ongoing debate. Lipson stresses the need for cautious development to ensure that the benefits of this technology are realized while minimizing potential risks.

“This will be a powerful technology. We have to go slowly and carefully, so we can reap the benefits while minimizing the risks,” Lipson said.

As researchers continue to explore robotic facial expressions, closing the gap between human and robot communication remains an active area of research.