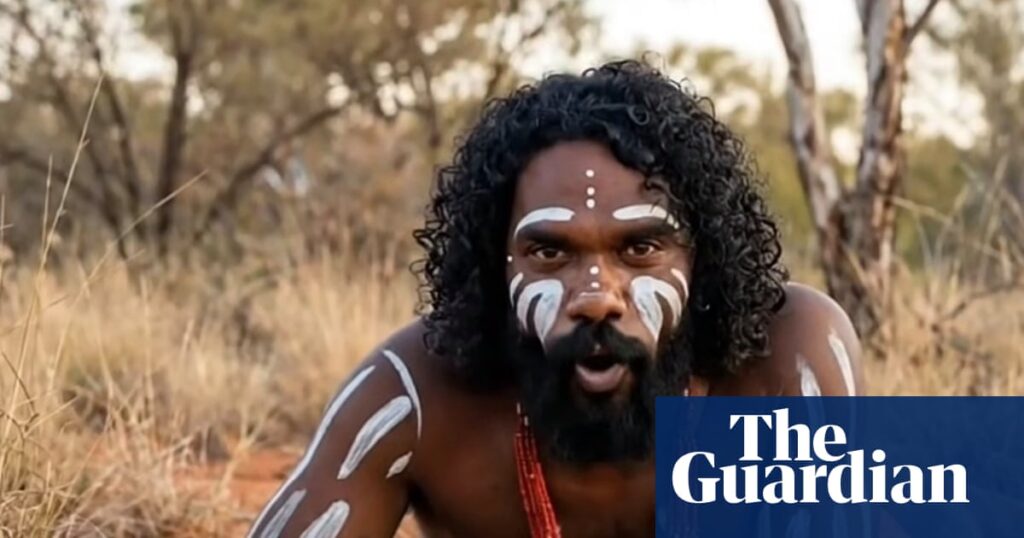

In the heart of the Australian outback, a charismatic figure known as Jarren captivates thousands with his adventurous spirit and passion for wildlife. With dark curls and brown eyes, he navigates the rugged terrain, sharing tales of venomous snakes and elusive night parrots. Yet, the man hailed as the “Aboriginal Steve Irwin” is not real. Jarren, the star of the social media account “Bush Legend,” is an AI-generated character created in New Zealand.

Since its inception in October 2025, the Bush Legend account has amassed 90,000 followers on Instagram and 96,000 on Facebook. The account, which originally shared satirical news content, now claims to focus on raising awareness about Australian wildlife. However, the revelation that Jarren is an AI creation has sparked ethical concerns and debate about cultural representation.

The Ethical Debate: Cultural Appropriation and AI

The creation of an Indigenous avatar has drawn criticism from cultural experts and advocates. Dr. Terri Janke, an authority on Indigenous cultural and intellectual property, describes the AI-generated content as “remarkable” in its realism but warns of the risks of “cultural flattening.” She questions the ethical implications of using AI to mimic Indigenous identities without consent.

“It’s theft that is very insidious in that it also involves a cultural harm,” Janke says. “Because of the discrimination … the impacts of stereotypes and negative thinking, those impacts do hit harder.”

Tamika Worrell, a senior lecturer in critical Indigenous studies at Macquarie University, calls the AI avatar a form of “digital blackface.” She highlights the dangers of cultural appropriation, where non-Indigenous creators use AI to craft Indigenous caricatures without accountability to the communities represented.

The Role of AI in Cultural Representation

The controversy surrounding Bush Legend underscores the broader challenges posed by AI in cultural representation. AI-generated content can perpetuate stereotypes and biases inherent in the data used for training. Toby Walsh, a professor of artificial intelligence, emphasizes that AI systems are not immune to these biases.

“They are going to carry the biases of that training data,” Walsh explains. “Certain groups may be stereotyped because the video data or the image data that exists in that group online is somewhat stereotypical. So we’re going to perpetuate that stereotype moving forwards.”

Walsh also notes the increasing difficulty in distinguishing AI-generated content from reality, a trend that complicates the public’s ability to discern truth from fabrication.

Implications for Digital Literacy and Cultural Sensitivity

The Bush Legend account has been dismissive of criticism, with the avatar stating that the page is “simply about animal stories” and encouraging viewers to “scroll on” if they dislike the content. This stance raises questions about the responsibility of content creators to address cultural sensitivity and the potential harm of their creations.

Experts argue that digital literacy is crucial in navigating the complexities of AI-generated content. As AI technology becomes more sophisticated, the “tells” that once helped identify fake content are becoming less apparent, making it imperative for users to approach digital media with a critical eye.

The debate over Bush Legend highlights the need for ethical guidelines and legislative measures to govern the use of AI in cultural contexts. As AI continues to evolve, the conversation around its impact on marginalized communities and cultural representation will remain a pressing issue.

Meta, which owns Instagram and Facebook, the platforms hosting the Bush Legend account, has yet to comment on the controversy. Meanwhile, the account’s creator, believed to be a South African residing in New Zealand, has not responded to inquiries.

As the lines between reality and digital fabrication blur, the Bush Legend case serves as a cautionary tale about the power and pitfalls of AI in shaping cultural narratives.