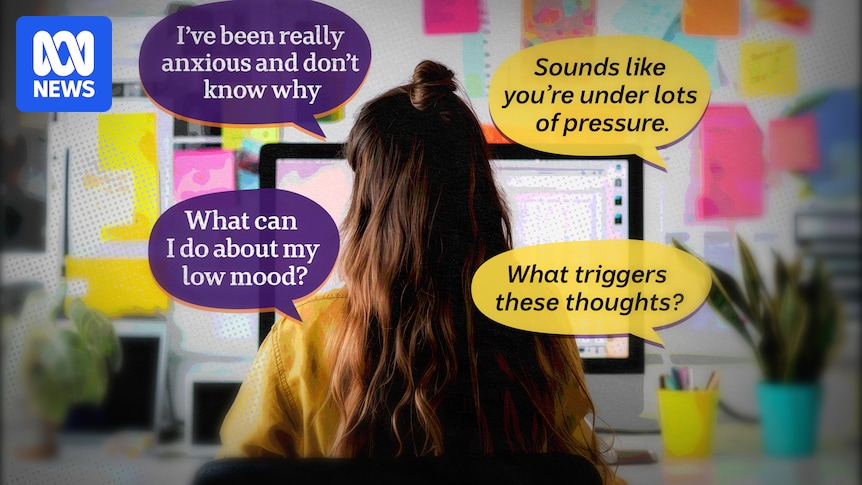

If you find it difficult to access or afford a mental health specialist, you might consider turning to artificial intelligence as a kind of “therapist” to support you. AI chatbots, known for their constant availability and empathetic responses, have become a popular alternative. However, evidence suggests that these digital companions can sometimes provide generic, incorrect, or even harmful advice.

Alarming cases have surfaced in which chatbots allegedly encouraged a 13-year-old to take their own life and advised a Victorian man on how to murder his father. These incidents have raised significant concerns about the safety and reliability of AI in mental health support. OpenAI, the company behind the widely used ChatGPT model, is currently facing multiple wrongful death lawsuits in the United States from families who claim the chatbot contributed to harmful thoughts.

Introducing MIA: A Safer AI Alternative

In response to these concerns, researchers at the University of Sydney’s Brain and Mind Centre are developing a smarter, safer chatbot designed to function more like a mental health professional. This innovative tool is called MIA, which stands for Mental Health Intelligence Agent. The Australian Broadcasting Corporation (ABC) was recently given a preview of how MIA operates.

Researcher Frank Iorfino conceived the idea for MIA after a friend inquired about mental health resources. “I was kind of annoyed the only real answer I had was, ‘Go to your GP.’ Obviously, that’s the starting point for a lot of people and there’s nothing wrong with that, but as someone working in mental health I thought, ‘I need a better answer to that question,’” Dr. Iorfino explained.

How MIA Works

MIA is designed to provide immediate access to expert mental health care by drawing on a knowledge bank composed of high-quality research from the Brain and Mind Centre. Unlike other AI models that scrape the internet for information, MIA relies solely on this internal database, minimizing the risk of “hallucinations,” or fabricated responses.

MIA assesses a patient’s symptoms, identifies their needs, and matches them with appropriate support. It is particularly useful for individuals with mood disorders such as anxiety or depression and has been trialed with dozens of young people in a user-testing study.

MIA vs. ChatGPT: A Comparative Analysis

When tested with a common anxiety-related prompt, MIA took a more thorough and clinical approach than ChatGPT. MIA began by asking about potential self-harm thoughts to determine whether immediate crisis support was needed. It then asked a series of questions to understand the user’s support network, stressors, physical health, and previous treatments for anxiety.

In contrast, ChatGPT quickly offered problem-solving advice without gathering sufficient background information. It provided generic reassurance, such as “You’re not alone,” without understanding the user’s specific circumstances.

While MIA is warm and empathetic, it does not try to befriend users and maintains a professional tone.
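
The question order described above (a self-harm screen first, then background questions, then matching to support) can be sketched as a small decision function. This is an illustration only, not MIA's actual implementation, which has not been published; the names `Session`, `INTAKE_TOPICS`, and `next_step` are hypothetical, and the topic list is taken directly from the areas mentioned in the test conversation.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Background topics the article says MIA asked about (order assumed).
INTAKE_TOPICS = [
    "support network",
    "stressors",
    "physical health",
    "previous treatments for anxiety",
]


@dataclass
class Session:
    # None means the self-harm screen has not been asked yet.
    self_harm_risk: Optional[bool] = None
    # Answers collected so far, keyed by intake topic.
    answers: Dict[str, str] = field(default_factory=dict)


def next_step(session: Session) -> str:
    """Return the next action for a hypothetical triage conversation."""
    # 1. Always screen for self-harm before anything else.
    if session.self_harm_risk is None:
        return "ask: self-harm screen"
    # 2. If risk is flagged, stop and point to urgent professional help.
    if session.self_harm_risk:
        return "refer: urgent professional help"
    # 3. Otherwise gather background, one topic at a time.
    for topic in INTAKE_TOPICS:
        if topic not in session.answers:
            return f"ask: {topic}"
    # 4. With background collected, match the user to support.
    return "match: appropriate support"
```

The point of the sketch is the ordering: no advice is offered until the risk screen and the background questions are complete, which is the contrast the comparison draws with a general-purpose chatbot.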

Handling Crisis Situations

Past instances of AI chatbots giving dangerous advice to people in crisis make this a critical test. MIA’s responses to prompts describing severe distress were notably clinical: it focused on assessing the risk to the user and recommended seeking urgent professional help, rather than continuing the conversation unnecessarily.

Dr. Iorfino emphasized that MIA is engineered to recognize its limitations and not to be seen as a replacement for professional help. One identified drawback was MIA’s lack of follow-up after recommending professional help, leaving the user to initiate contact with support services.

The Future of AI in Mental Health

MIA is expected to be publicly available next year, with plans to host it on platforms like the federal government’s HealthDirect website. While MIA cannot replace human professionals, experts believe that “therapy” chatbots will remain a crucial component of mental health support, especially given the shortage of mental health professionals.

Jill Newby, a clinical psychologist at the Black Dog Institute, advocates for evidence-based chatbots with strong safeguards. She cautions against the proliferation of general mental health apps lacking scientific backing. Building a safe and effective chatbot requires significant investment and extensive clinical trials to ensure it genuinely improves users’ quality of life.

“You like it because it sounds so convincing and the research sounds powerful but it’s actually completely wrong … I only see that because I have the knowledge and literature to go and check,” Professor Newby stated.

The Therapeutic Goods Administration (TGA) currently regulates some online mental health tools, but many apps circumvent these rules by claiming to be educational rather than therapeutic. The TGA is reviewing regulations to better address digital mental health tools.

As AI continues to evolve, the development of safe, effective mental health chatbots like MIA represents a promising step forward. However, ongoing vigilance and regulation are essential to ensure these tools provide reliable support without replacing the invaluable role of human mental health professionals.