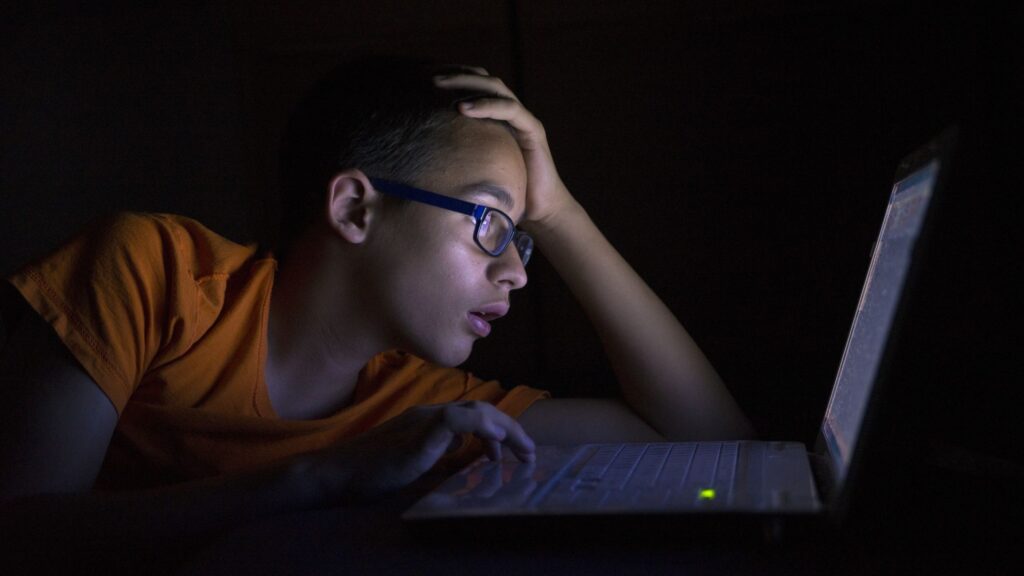

Generative AI has evolved rapidly, reaching unprecedented capabilities in fields including computing, education, and medicine. However, researchers warn that its progress could be undermined by a phenomenon they call “brain rot.” A recent study by researchers at Texas A&M University, the University of Texas at Austin, and Purdue University, posted to Cornell University’s arXiv preprint server, found that continually training large language models (LLMs) on low-quality online data degrades their cognitive capabilities.

The study’s findings are particularly concerning for leading AI labs such as Anthropic, OpenAI, and Google, which rely heavily on human-generated web content to train their LLMs. Last year, reports indicated that these companies were struggling to find enough high-quality training data, a challenge that continues to constrain AI development. The new study adds to this concern by showing that LLMs suffer “brain rot” when trained on poor-quality data, with measurable declines in reasoning accuracy and long-context comprehension.

Understanding “Brain Rot” in AI

The term “brain rot” borrows from concerns about humans, where a steady diet of trivial, low-quality content is thought to erode reasoning and focus. For AI, the analogous effect is a model that is less reliable and more prone to errors. The researchers defined junk content using two measures: engagement, which flags short posts that are highly popular, and semantic quality, which flags posts written in a sensationalized, clickbait style.

The researchers built datasets with varying proportions of junk and high-quality content, then continued training models such as Llama 3 and Qwen 2.5 on them. The goal was to measure how reliance on low-quality web content affects LLMs at a time when the web is increasingly flooded with short, viral, or machine-generated posts.

Impact on AI Performance

The results are striking. On a reasoning benchmark (ARC-Challenge with chain-of-thought prompting), accuracy fell from 74.9% to 57.2% as the proportion of junk data in training rose from 0% to 100%, while scores on a long-context comprehension benchmark (RULER) dropped from 84.4% to 52.3%. The researchers describe this as a “dose-response effect”: the larger the share of junk in the training data, the steeper the decline.

The study also found that junk data altered the models’ behavioral profile, producing a “personality drift” in which they scored higher on so-called dark traits such as narcissism and psychopathy. Their reasoning suffered as well: the models increasingly skipped step-by-step reasoning, a failure mode the authors call “thought-skipping,” which resulted in shallow, error-prone answers.

The Emergence of the “Dead Internet Theory”

This development aligns with the “dead internet theory,” the idea that much of the internet’s activity is now generated by bots rather than people, which has been gaining traction among tech industry leaders. Reddit co-founder Alexis Ohanian and OpenAI CEO Sam Altman have both voiced concerns about an internet increasingly dominated by bots and “quasi-AI” content. Ohanian predicts that the next generation of social media will be verifiably human, while Altman has remarked that many accounts on X (formerly Twitter) appear to be run by LLMs.

Last year, a study by Amazon Web Services (AWS) researchers suggested that 57% of content published online is AI-generated or machine-translated, a trend that has been linked to declining search result quality. Former Twitter CEO Jack Dorsey has also warned that advances in image generation, deepfakes, and video are making it increasingly difficult to distinguish real content from fake.

Implications and Future Outlook

The implications of these findings are significant. Because LLMs are trained largely on web data, a web increasingly filled with low-quality and machine-generated content feeds directly into the next generation of models. Without access to high-quality data, AI systems risk becoming less reliable and less effective, potentially accelerating a “dead internet” in which genuine human interaction is overshadowed by automated content.

Moving forward, the study’s authors recommend that AI developers treat training-data curation as a safety issue, filter out low-quality content, and run routine “cognitive health checks” on deployed models. Prevention appears to matter: in their experiments, further training on clean data improved the affected models but did not fully restore their original performance.

As the digital landscape evolves, the responsibility falls on both tech companies and users to maintain the integrity of the internet. By fostering an environment that values quality over quantity, the industry can work towards a future where AI enhances rather than detracts from the online experience.