Deloitte Australia recently faced significant embarrassment when a report prepared for a federal government department was found to contain fabricated content, highlighting the potential hazards of artificial intelligence (AI) in the workplace. The incident serves as a stark reminder of the technology’s limitations and the need for human oversight.

The report, commissioned by the Department of Employment and Workplace Relations (DEWR) at a cost of A$440,000, included a fictitious quote from a federal court judgment and invented academic references. After the errors came to light, Deloitte issued a partial refund to the government, as reported by The Australian Financial Review.

The AI Hallucination Phenomenon

The term “AI hallucination” describes instances where AI systems generate plausible-sounding but inaccurate information. These errors often stem from limited or biased training data and from the fact that the models predict likely text rather than verify facts. Because they are built to produce an answer to whatever the user asks, they may effectively guess and return incorrect results instead of acknowledging that they lack the information.

Deloitte’s mishap underscores the risks of relying heavily on AI without thorough human verification. The firm has since uploaded a revised version of the report, with the fabricated material removed, to the department’s website. The incident raises questions about how AI is being adopted in corporate environments more broadly.

Corporate Enthusiasm and Caution

In Australia, businesses are eagerly embracing AI as a tool for boosting productivity. However, the Deloitte case illustrates the gap between AI’s theoretical capabilities and practical outcomes. Companies like Commonwealth Bank have also experienced challenges in aligning AI’s potential with real-world applications.

The adoption of generative AI, which learns from existing data to produce text, videos, and images, is still in its early stages. Mistakes are inevitable as organizations navigate this new technological frontier. Deloitte’s failure to adequately review the AI-generated report highlights the importance of maintaining rigorous quality control processes.

Deloitte’s AI Expertise Under Scrutiny

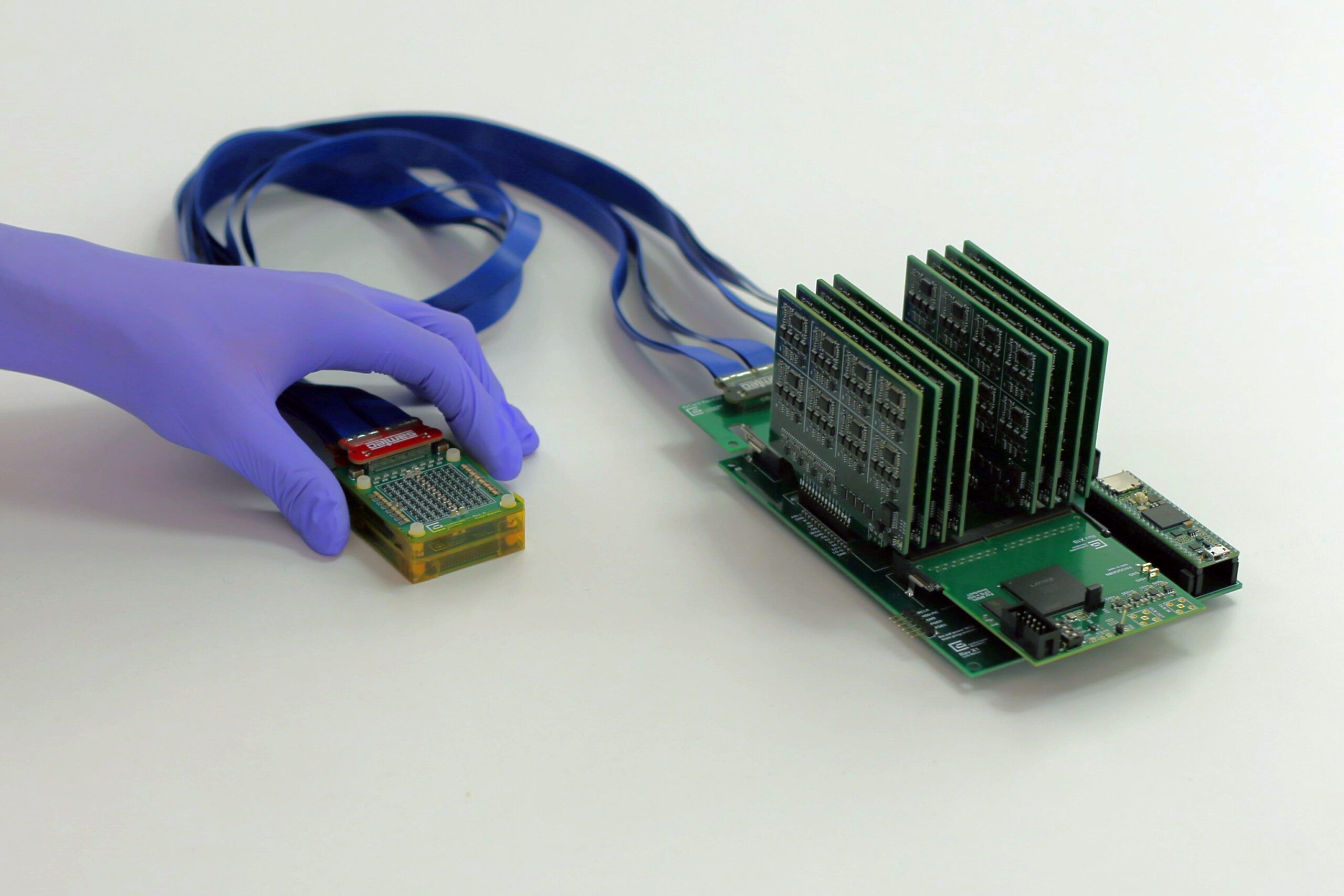

Ironically, Deloitte promotes itself as a leader in AI deployment, advising clients on integrating AI into their operations. The firm’s marketing materials emphasize the need for strategic management of AI, akin to running a manufacturing line or supply chain. Yet even experts can falter, as this incident shows.

Critics have also pointed out Deloitte’s lack of transparency regarding its use of AI in the original report. The revised document now includes a note acknowledging that generative AI was used to address “traceability and documentation gaps.” This admission raises concerns about the potential for similar issues in other AI-driven projects.

Looking Ahead: The Need for Checks and Balances

The Deloitte debacle serves as a cautionary tale about the unchecked use of AI. While the technology holds immense promise, it also necessitates a robust framework of checks and balances to prevent future errors. As AI continues to evolve, organizations must prioritize transparency and accountability in its deployment.

Ultimately, the incident with Deloitte is not an argument against AI but a call for careful consideration of its limitations. As more companies integrate AI into their operations, the need for human oversight and thorough verification processes becomes increasingly critical. The journey towards effective AI utilization will likely involve learning from mistakes and refining best practices.

The Deloitte case has exposed pitfalls in AI deployment that are likely to recur as the technology becomes more prevalent. The key will be to implement safeguards that ensure AI serves as a reliable tool rather than a source of error.