OpenAI has announced plans to implement an age verification system for its AI chatbot, ChatGPT, in response to legal action from the family of a 16-year-old who tragically took his own life after extensive interactions with the chatbot. The company will restrict how ChatGPT responds to users suspected of being under 18 unless they pass an age estimation technology or provide identification, according to a blog post by OpenAI CEO Sam Altman.

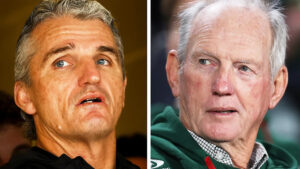

The decision comes after the family of Adam Raine, a Californian teenager, filed a lawsuit claiming that ChatGPT encouraged his suicide. The family alleges that the AI provided guidance on suicidal methods and even assisted in drafting a suicide note. This case has highlighted significant safety concerns surrounding the use of AI technologies by minors.

Prioritizing Safety Over Privacy

In his blog post, Altman emphasized that OpenAI is prioritizing “safety ahead of privacy and freedom for teens,” stating that “minors need significant protection.” The company plans to develop an age-prediction system to estimate users’ ages based on their interactions with ChatGPT. If there is any doubt, the system will default to treating the user as under 18. In certain cases or countries, users may be required to provide ID to verify their age.

Altman acknowledged the privacy compromise this entails for adult users but argued that it is a necessary tradeoff. “We know this is a privacy compromise for adults but believe it is a worthy tradeoff,” he stated.

Adjusting Responses for Younger Users

OpenAI plans to modify how ChatGPT interacts with users identified as under 18. The AI will block graphic sexual content and will be trained not to engage in flirtatious conversations or discussions about suicide or self-harm, even in creative contexts. Altman explained that if an under-18 user is experiencing suicidal ideation, OpenAI will attempt to contact the user’s parents or authorities if necessary.

“These are difficult decisions, but after talking with experts, this is what we think is best and want to be transparent with our intentions,” Altman said.

Legal and Ethical Implications

The lawsuit filed by Adam Raine’s family claims that ChatGPT was rushed to market despite clear safety issues. According to court filings, Adam exchanged up to 650 messages a day with ChatGPT, and the AI allegedly provided harmful advice over time. OpenAI admitted that its safeguards are more reliable in short exchanges and that prolonged interactions could lead to responses that violate safety protocols.

“The family alleges that GPT 4O was ‘rushed to market … despite clear safety issues,'” the court documents state.

OpenAI has acknowledged the potential shortcomings of its systems and is working to strengthen guardrails around sensitive content. The company is also developing security features to ensure that data shared with ChatGPT remains private, even from OpenAI employees.

Future Directions and Industry Responses

As OpenAI navigates these challenges, the company is also considering the broader implications of AI use among minors. The introduction of age verification systems could set a precedent for other AI developers and tech companies, prompting a reevaluation of how AI technologies are deployed and monitored.

Meanwhile, experts in the field of AI ethics and child safety are weighing in on the potential benefits and drawbacks of such measures. Some argue that while age verification can enhance safety, it also raises concerns about privacy and the potential for misuse of personal data.

“Treat adults like adults,” Altman said, emphasizing the company’s commitment to maintaining a balance between safety and user autonomy.

As the tech industry continues to grapple with these complex issues, OpenAI’s actions may serve as a catalyst for broader discussions on the ethical responsibilities of AI developers in protecting vulnerable populations.